Mapping based on the data amount is a common requirement for FDM. As an example, if the amount is positive it should be mapped to the debtor account, if it’s negative it should be mapped to the creditor account. I have done this in a number of different ways depending on the requirements and this is listing the different methods I have used. I am not suggesting this is an exhaustive list; I am sure there are other ways of doing it.

This is just an overview that is meant to give you some ideas on how to approach mapping by amount. The scripts illustrate the point I am trying to make; you will need to edit and add to them to get them to work for your own application.

I will be covering four different ways of doing this:

| Description… | Advantages… | Disadvantages… | Applies to… | |

|

Write a script against the map |

|

|

Individual records |

|

Create a logic account based on the record value |

|

|

Accumulated records |

|

Edit the account label as the record is imported |

|

|

Individual records |

|

Apply the maps for the negative records after the map process has completed |

|

|

Individual and Accumulated records |

‘Audit trail lost’

This means that the metadata has been changed in some way and is now not the same as the source records in the ledgers. While this might be acceptable for Management applications, it will probably be flagged as an issue for Statutory. It also means that, if you are using ERPi in conjunction with FDM, you cannot drill back to the source ledger.

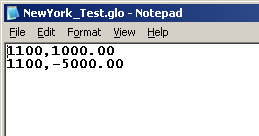

Data

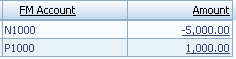

In the following examples there are two data records each with two fields. The first field is the Account (1100) which is the same for both records. The second field is the data amount. One record has an amount of 1,000 and the other -5,000.

Positive values should be mapped to account P1000; negative values to account N1000.

Conditional mapping

Conditional mapping is where you add a script to a mapping record. This means that you can control when a map is or is not applied. FDM processes the data one record at a time and holds the information for each record in an array called varValues. (Search the FDM Admin manual for varValues for more information). The data amount is held in varValues(9) so you can write a script that will map based on the value in this field:

|

Dim DataAmount DataAmount = varValues(9) If CDbl(DataAmount) > CDbl(0) Then Result = “P1000” Else Result = “N1000” End If |

Notice both amounts are converted to a double (CDbl). In the later versions of FDM you get a type conversion error if you don’t use the CDbl function. |

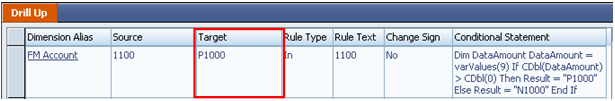

If you use the ‘Show Conversion Rules’ feature (or Drill Up), FDM will always show in the Target column the first return value in the script regardless of what is actually applied (ie it is always P1000 even when you drill into the N1000 record):

You need to go to the Validate step to see the two records mapped to separate targets:

- Simplest method.

- There is no idea of ‘skipping’ the record but you do not need to have an Else clause.

- This method looks at individual data records.

- Scripts cannot be applied to Explicit maps.

Logic Account

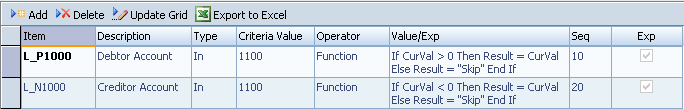

You can create a logic account that will only create a record if certain conditions are met. In this case the condition will be that the data amount is positive or negative. This is an example using a Simple Logic Group but the principle is the same for Complex:

The important difference between Logic Accounts and conditional mapping is that conditional mapping is applied to an individual source data record as it is being imported; Logic Accounts are applied after the data has been imported and records with the same metadata attributes are accumulated.

| If CurVal > 0 Then Result = CurVal Else Result = “Skip” End If |

CurVal is the result of the query. This logic account is only created if the value is greater than zero. |

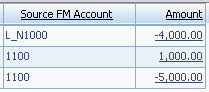

FDM looks at the accumulated values for the specified criteria (CurVal). In this case we have just specified that the source account is 1100. FDM will therefore look at the sum of the two source records (1,000 and -5,000) to get the amount of -4,000 and this is the value that is used for the creation of the logic account.

- More advanced method but still using FDM’s built-in functionality.

- If the metadata attributes of the source record are the same and you still want to map to different targets based on the data amount, you cannot use Logic Accounts because all the records with the same metadata attributes are combined. The values are accumulated and then the logic accounts are applied so in our example we only have one record with an amount of -4000,

- You might need to create ‘Ignore’ mappings for the source records.

- The record that is being loaded into the target system has been generated from the source records. It will not exist in the source ledger. It is not easy to track which records have been used to create the logic account.

Import script

You can create an import script that is applied to the Account dimension. The script looks at the data amount for the record and then updates the Account label so that records with positive data amounts can be distinguished from those with negative amounts. For example, the script might add ‘P_’ to the beginning of the Account if the data value is positive and ‘N_’ it is negative.

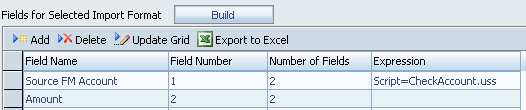

- Create an Import script (DataPump). In this example the import script is called CheckAccount (and there are other ways to achieve this):

| Function CheckAccount(strField, strRecord) Dim dblDataAmount ‘Parse out the data amount from the input record ‘If the data value is positive add P_ to the beginning of the If CDbl(dblDataAmount) > 0 Then End Function |

The script parses out the data amount from the source record and stores it in the variable dblDataAmount.

The script must set a value to the function CheckAccount. The script is applied to the account field so the parameter strField contains the account label. The script then adds either P_ or N_ to the account label depending on the data value. |

- Apply the script to the import format.

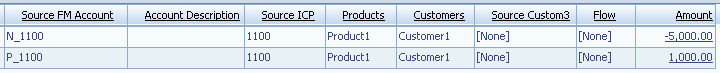

- The script updates the source account label:

- Create standard maps to convert P_1100 to P1000 and N_1100 to N1000.

- Simple scripting solution.

- The script works on individual records.

- You might want to restrict the script to execute only for certain accounts. You will need to have a method for identifying these accounts.

- You might need specialist scripting help.

- The account label has been modified by the import script and will not exist in the source ledger.

Event script

An Event script is a script that is executed in response to an event in FDM. By default Event scripts are empty and when you create an Event script it will be executed automatically by FDM when appropriate.

After the data has been imported into FDM, the logic group is executed and then the maps are applied. The script for updating the maps based on data value will execute after the maps have been applied and, in essence, will apply a second set of maps. This is done by executing a SQL statement directly on the FDM database. Before we look at the script we need to understand how FDM stores its mapping data.

Mapping table

The maps for all locations are held in the table tDataMap. If you use the ‘Export to Excel’ function for the maps this should look familiar. Take note of the DataKey field in the screenshot below. Also notice there is a second map for the source account 1100 which has a suffix of ‘NEG’:

Data table

There are 50 data tables in an FDM application by default. When a location is created it is assigned one of these 50 tables to store its data; this is called the ‘segment’. If you have 300 locations in an application, you will have roughly six locations sharing a segment. In order to retrieve the data from the database for a location, you need to know the location’s segment number. This will then give you the name of the table for the data segment eg tDataSeg4 or tDataSeg23.

Here is an example of the data segment table. Two records have been imported. Looking at the Account dimension, the source account label is held in the column ‘Account’ (1), the target account label is held in the column ‘AccountX’ (2) and the DataKey value from the mapping table is held in the column ‘AccountR’ (3):

The Event script will update the data segment table so that the AccountX column holds the updated target account label and the AccountR column will hold the datakey value for this new map record. The information for the AccountX and AccountR columns is held in the 1100NEG map record.

The script – When to execute

The key to Event scripts is understanding when to execute them. Event scripts are documented in the FDM API Object Guide. The script can be executed at a number of different places; the main requirement is that the default mapping process must have finished when the event script is executed. In this example we will use the Event ImportProcess. This event has one parameter: strEventName. You will want to execute the script only for the event name ‘EndProcess’.

The script – What to execute

In order to map the negative data records to a different target, you need to update the AccountX and the AccountR values when the Amount value is less than zero. You only want to do this for the current location, category and period.

The first step is to write and test the SQL statement that will update the record without worrying about data segments, locations, categories etc.

| UPDATE tDataSeg4SET AccountX = ‘N1000’, AccountR = (SELECT DataKey FROM tDataMap WHERE SrcKey = ‘1100NEG’ AND DimName = ‘Account’ AND PartitionKey = 751) WHERE AccountX = ‘P1000’ AND CatKey = 13 AND PartitionKey = 751 AND PeriodKey = ‘1/31/2012’ AND Amount < 0 |

This will update the record so that the account is mapped to N1000 and the correct DataKey value is stored for records where the Amount is negative. |

Once you have tested that the SQL syntax is correct, you need to look at updating the script with correct segment id, category id etc.

Update the script with the correct ids

| Segment id | RES.PlngSegKey | |

| Partition key | RES.PlngLocKey | If the location uses a parent location for its maps, the Partition key will need changing. |

| Category key | RES.PlngCatKey | |

| Period key | RES.PdtePerKey | The period key might need some manipulation depending on the date format. |

- The example is written for SQL Server. Oracle is case sensitive so DimName = ‘Account’ will need reviewing (LIKE ‘A%’).

- The example updates individual records. You can use the SUM function on the Amount field to apply the update to the accumulated value. You will also need to use a GROUP BY clause.

Completed script

The ImportAction script will look similar to this:

| Sub ImportAction(strEventName) If strEventName = “EndProcess” Then ‘Get the name of the DataSeg table. This holds the data for the location. ‘Set up the Update SQL query. This will update the tDataSeg table with strWhere = “WHERE AccountX = ‘P1000’ ” ‘Put the SQL statements together and add the check for the Amount being negative. blnReturn = DW.DataManipulation.fExecuteDML(strUpdate, RES.FDMFalse, RES.FDMTrue) End If End Sub |

- Complex scripting solution. You might need specialist scripting help.

- The script works on individual or accumulated records.

- You will want to restrict the script to execute only for certain accounts. You will need to have a method for identifying those accounts.

- The account label has not been modified by the script so drill back to the source ledger will still work.