In my previous blog I talked about Lookup Dimensions and how they can be used to simplify mapping by storing information that is relevant to a small subset of accounts. In this blog I want to talk about how to use Lookup Dimensions to store calculated data. By ‘calculated’ I mean data that is not in the source such as the Target member as a Source. See Francisco’s excellent blog for an example here. I will be going through an example of how to use a Lookup Dimension so that you can easily map based on the data amount.

Mapping requirement

It is quite a common FDMEE requirement to want to map based on the data amount. For example if the value is positive it should go the debtor account and if it’s negative the creditor account. I have blogged about this before and all of the methods had a drawback of some sort. I think I have now come up with a really simple and effective method using Lookup Dimensions.

Before I go into the detail I want to lay down the ground rules:

- Easy for the users to manage

- The map must be contained in the Export All Dimensions to text file extract

- No manipulating of the source labels

- Editable in the workspace

- Preferably not through Logic Accounts

- Minimal scripting

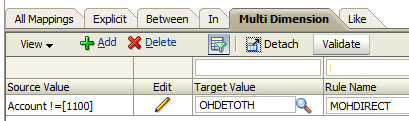

Multi-Dim maps

Up until recently my preferred method was to use a Multi-Dim map and specify that the ‘dimension’ to check was AMOUNT so something like this:

#MULTIDIM ACCOUNT=[228*] AND AMOUNT >= 0

This works really well. The main drawback is that you can’t edit this through the workspace because it does not recognise AMOUNT as a dimension – the dimension selection box is blank. This means you have to manage the maps outside FDMEE. I have always strongly advised my clients to maintain their maps outside FDMEE anyway but it would be better if the maps could be edited either way.

Method overview

This new method involves creating a Lookup Dimension and updating it with text that identifies if the amount is positive or negative. First of all I will go through a high-level overview of the steps; the detail of how to do it follows:

- Add a Lookup Dimension to the Target Application.

- Add a Field against the Lookup Dimension on the Import Format. You specify any data – it doesn’t matter. If you don’t do this step you cannot use the Lookup Dimension in Multi-Dim maps.

- Write an Event script to populate the Lookup Dimension with some appropriate text to identify whether the amount is positive or negative.

Add the Lookup Dimension

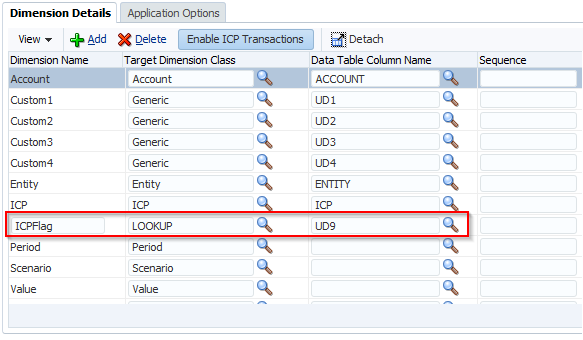

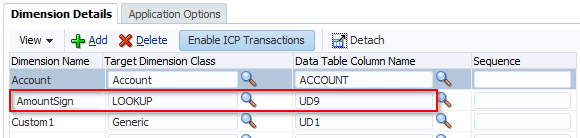

From the Setup tab select Register | Target Application. Then select the Target Application from the Target Application Summary area. Click Add and fill in the boxes. I have called my Lookup Dimension AmountSign and assigned it to UD9 (remember this – we will need it later):

Update the Import Format

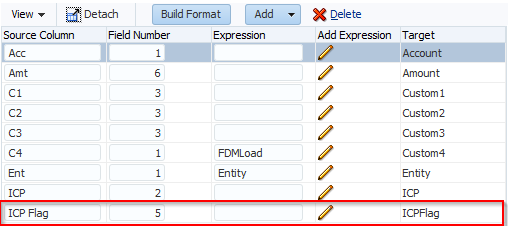

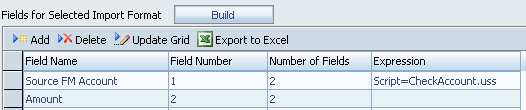

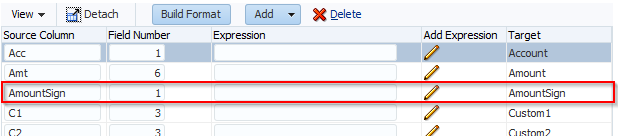

The Import Format will contain the new Lookup Dimension but it won’t have a Field assigned to it. I have assigned the Field Number 1 but it could be anything:

Event Script

Now for the bit that does the actual work. Once the data has been imported, the Event script will execute a SQL command to update the value of the AmountSign Lookup Dimension dependent upon whether the data amount is positive or negative. Notice the script needs to reference the Data Table Column Name from earlier (UD9):

# Lookup Dimension

LOOKUP_DIM = 'UD9'

# Text for a Positive number

POSITIVE_NUMBER = '+'

# Text for a Negative number

NEGATIVE_NUMBER = '-'

# SQL to update the LookUp Dimension value

SQL_TEMPLATE = """UPDATE TDATASEG_T SET %s = '%s' WHERE LOADID = ? """

# AND clause for the positive amount.

SQL_AND = """AND AMOUNT > 0"""

# Put the load id into the parameter list

lstParams = [fdmContext["LOADID"]]

# First the Negative - set everything to negative.

SQL = SQL_TEMPLATE % (LOOKUP_DIM, NEGATIVE_NUMBER)

fdmAPI.executeDML(SQL, lstParams, True)

# Now the positive so add the AND clause.

SQL = SQL_TEMPLATE % (LOOKUP_DIM, POSITIVE_NUMBER) + SQL_AND

fdmAPI.executeDML(SQL, lstParams, True)

The Event Script I selected is AftImport but there are others you can choose. Look in the Admin Guide or see here for more information on what Event Scripts are available. You will obviously want a script that is executed before the maps are processed. You will also want to add error handling and more comments.

Notice that the script first sets all records to be the negative value and then updates the records with a positive value – this is slightly quicker in terms of execution. I have deliberately put all the processing into this one script. I could have added the negative value to the Expression column on the Import Format but this would mean that if you wanted to change the positive and negative text (e.g. to P and N) you would have to go to two places to make the changes. This way everything that is needed for the AmountSign dimension is in this one script.

Import the data

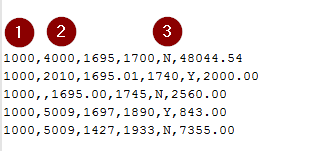

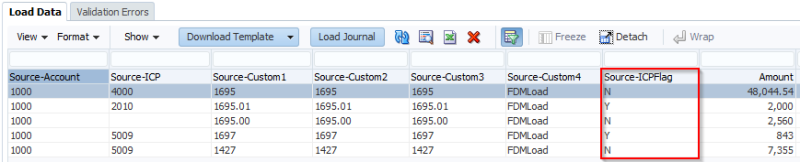

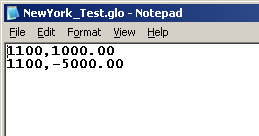

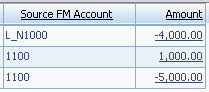

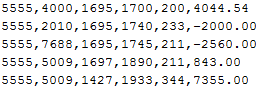

This is our source data (Account, ICP, Custom1, Custom2, Custom3, Amount):

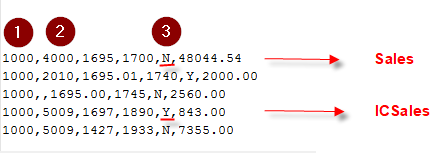

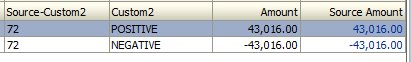

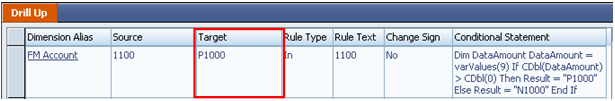

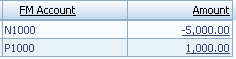

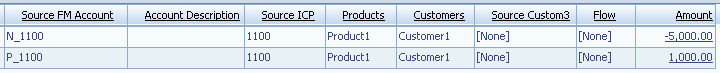

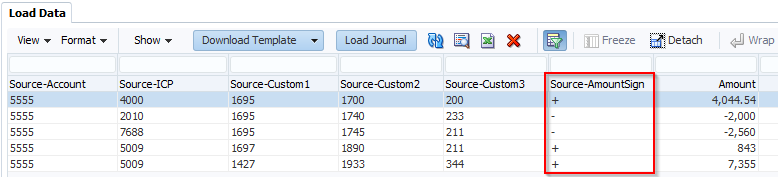

And this is how it looks after the import with the AmountSign dimension. You can see that you now have a piece of text that you can use in maps:

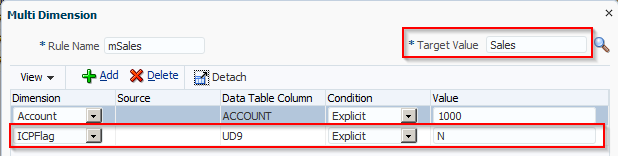

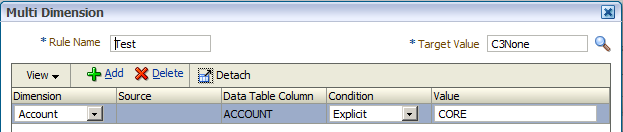

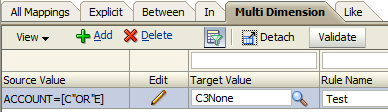

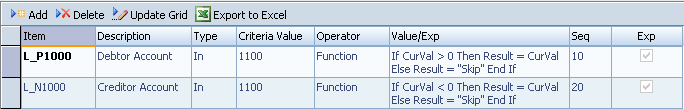

Multi-Dim maps

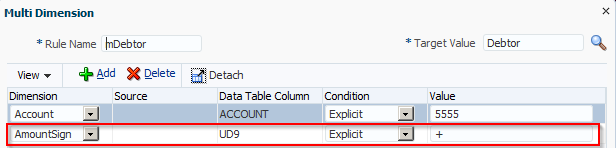

Now I can create a Multi-Dim map that references the AmountSign dimension:

This means that the users can now map by the data amount without any scripting or editing of the labels or logic accounts. And the map is contained in the Export All Dimensions.

Taking it one step further…

There are certain types of account that needs to be accumulated before they can be mapped for example VAT. Logic Accounts are usually used to aggregate source data but they have their own set of problems (difficult to trace back to the ledger, need to ignore the source records, auditors don’t like them…). SQL commands can be used to aggregate data. That means that if there is a requirement to aggregate data before applying the map we might be able to do it in an Event script.

Add a Lookup Dimension (I will call it AmountAccumSign and assign it to UD8) and add it to an Import Format as before. Change the Event Script to this (the new lines have _ACCUM):

# LookUp Dimension

LOOKUP_DIM = 'UD9'

# Lookup dimension for the accumulated amount

LOOKUP_DIM_ACCUM = 'UD8'

# Text for a Positive number

POSITIVE_NUMBER = '+'

# Text for a Negative number

NEGATIVE_NUMBER = '-'

# SQL to update the LookUp Dimension value

SQL_TEMPLATE = """UPDATE TDATASEG_T SET %s = '%s' WHERE LOADID = ? """

# AND clause for the positive amount.

SQL_AND = """AND AMOUNT > 0"""

# SQL for the accumulated amount - positive.

SQL_TEMPLATE_ACCUM = """UPDATE TDATASEG_T SET %s = '%s'

WHERE LOADID = ? AND (ACCOUNT IN

(SELECT ACCOUNT

FROM TDATASEG_T

GROUP BY LOADID, ACCOUNT

HAVING LOADID = ? AND SUM(AMOUNT) > 0))"""

# Put the load id into the parameter list

lstParams = [fdmContext["LOADID"]]

# First the Negative - set everything to negative.

SQL = SQL_TEMPLATE % (LOOKUP_DIM, NEGATIVE_NUMBER)

fdmAPI.executeDML(SQL, lstParams, True)

# Now the positive so add the AND clause.

SQL = SQL_TEMPLATE % (LOOKUP_DIM, POSITIVE_NUMBER) + SQL_AND

fdmAPI.executeDML(SQL, lstParams, True)

# Set all the accumulated values to negative

SQL = SQL_TEMPLATE % (LOOKUP_DIM_ACCUM, NEGATIVE_NUMBER)

fdmAPI.executeDML(SQL, lstParams, True)

# Clear out the parameter list because it needs to have the loadid twice

lstParams = []

lstParams = [fdmContext["LOADID"], fdmContext["LOADID"]]

# Update the records to positive where the accumulated amount > zero.

SQL = SQL_TEMPLATE_ACCUM % (LOOKUP_DIM_ACCUM, POSITIVE_NUMBER)

fdmAPI.executeDML(SQL, lstParams, True)

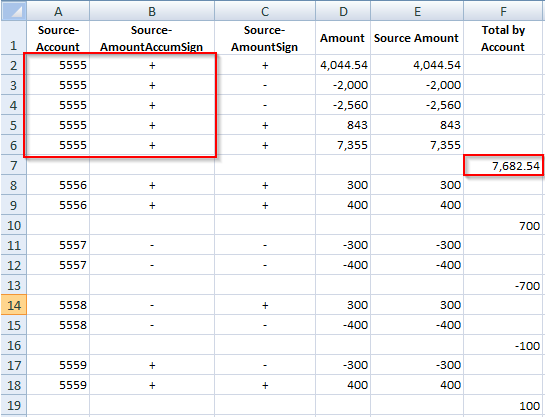

Import the same data and include some test data and this is what you get. I am showing it in Excel format so that it’s easy to show the subtotals by Account:

This works well if you are wanting to accumulate the source data by the same dimensions for all the source rows (e.g. Account and Custom1). If you want to accumulate some data by Account and Custom1 and others by Account and Custom2, then this probably won’t work for you and it’s better to stick to Logic Accounts.

NOTE: I have tested this on SQL Server and I can’t see anything that would stop it working on Oracle but I might be wrong.

Conclusion

Using Lookup Dimensions to hold the result of a SQL command works really well and for the most part is transparent to the users. They just see the result as added information. The flexibility of being able to map by data amount (and perhaps accumulated data amount as well) with little impact on the system while keeping it all in standard map format is a big win in my opinion. You can use the same principle for other requirements such as wanting to use <BLANK> in a Multi-Dim map – create a Lookup Dimension and update it to BLANK where the relevant field is empty.